The digital revolution is here, and data is taking over. Human existence is being condensed, chronicled, and calculated, one bit at a time, in our servers and tapes. From several perspectives this is a good thing, as it allows us to take information that would have previously filled warehouses with paper, and perform calculations that would have taken warehouses of mathematicians, and consolidate these functions into a neat little box. But there’s a hidden cost to all of this. For every tweet, photo, or blog post, there is a physical manifestation somewhere in the world. It’s so tiny you can’t see it, but when you add these little nothings up by the trillions, they start to take shape in the form of miles of server farms and storage racks.

Here’s the real cost: these things use a lot of energy! Servers need power to process our data, and this power creates heat, which has to be dealt with to keep the servers working. As servers increase in density, and we can do even more things inside our neat little boxes, their heat output becomes immense and they require specialized systems to efficiently reject this heat.

In 2013, data centers in the U.S. consumed an estimated 91 billion kilowatt-hours [1] of electricity. Per the U.S. Energy Information Administration [2], that’s about 7% of total commercial electric energy consumption. This number is only going to go up. By 2020, it is estimated consumption will increase to 140 billion kilowatt-hours [1], costing about $13 billion in power bills.

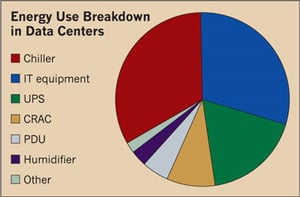

So where does this energy go? As I said before, the servers themselves need power to do their thing, but the cooling system consumes a lot of energy as well. In cutting-edge data centers, the cooling system uses only a small fraction of the energy the servers do. But in less efficient data centers, cooling systems can use just as much power as the IT equipment, or even more.

Lucky for us, we have a handy metric to capture just how efficiently a data center is operating. It’s called Power Usage Effectiveness (PUE), and it measures how much additional energy a data center consumes beyond what is directly used by IT equipment. PUE is calculated as the total energy of the facility divided by the IT equipment energy. The higher the PUE, the less efficiently the data center is performing.
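To make the ratio concrete, here is a minimal Python sketch of the calculation described above. The function name `pue` and the meter readings are hypothetical, chosen only for illustration; they are not measurements from any real facility.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical annual energy readings (kWh), for illustration only.
lean_site = pue(total_facility_kwh=1_200_000, it_equipment_kwh=1_000_000)
wasteful_site = pue(total_facility_kwh=2_000_000, it_equipment_kwh=1_000_000)

print(f"Lean site PUE:     {lean_site:.2f}")
print(f"Wasteful site PUE: {wasteful_site:.2f}")
```

Both hypothetical sites run the same IT load, so the gap between the two values comes entirely from cooling and other overhead.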

Operators are (or should be) constantly striving to reduce this number, because every dollar spent on energy is one less dollar that can be spent on operations. The best data centers in the world are pushing this number very close to 1.0, which is ideal efficiency. The reality is that most existing data centers are nowhere close to this holy grail. In fact, a survey by Digital Realty Trust in 2013 [3] revealed the average PUE of large data centers to be a shocking 2.9! In plain terms, this means that for every dollar spent on IT power, almost two dollars are spent to keep the IT housed and comfortable. With modern technology, we can do so much better!
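The "almost two dollars" figure follows directly from the definition: overhead per IT dollar is simply PUE minus 1. The sketch below works through that arithmetic. It assumes IT and overhead energy are billed at the same electricity rate, and the function names, the $1,000,000 IT energy bill, and the 1.5 target are hypothetical values picked for illustration.

```python
def overhead_per_it_dollar(pue: float) -> float:
    """Dollars of non-IT energy spent per dollar of IT energy.

    Assumes one flat electricity rate for the whole facility.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return pue - 1.0


def annual_savings(it_energy_cost: float, current_pue: float, target_pue: float) -> float:
    """Energy cost avoided per year by moving from current_pue to target_pue,
    holding the IT load (and its cost) constant."""
    if current_pue < 1.0 or target_pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_cost * (current_pue - target_pue)


# Survey average quoted in the text.
print(f"Overhead per IT dollar at PUE 2.9: ${overhead_per_it_dollar(2.9):.2f}")

# Hypothetical facility: $1,000,000 per year of IT energy, improving 2.9 -> 1.5.
print(f"Hypothetical annual savings: ${annual_savings(1_000_000, 2.9, 1.5):,.0f}")
```

Because the IT load is held fixed, every 0.1 shaved off the PUE is worth 10% of the IT energy bill under this simple model.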

PUE is somewhat climate-based. It’s not apples-to-apples to compare a data center’s performance in Dubai to one in Helsinki. But by and large, a data center’s inefficiencies are a result of poor design, operations, or maintenance of the cooling system. It’s very common to walk through a data center and see too many fans spinning and airflow wasted. Further, many existing data centers still operate under old notions that server rooms must be so cold you need a parka to walk through them. Recent guidance from ASHRAE [4] has taught us that servers are much tougher than we used to think, but designers and operators alike are still catching on to this.

Optimizing Data Center Airflow

There are a lot of components to your average data center’s cooling system. There need to be mechanisms to not only pick up the heat from the servers, but to carry it out of the building. These include computer-room air conditioners (CRACs), chillers, condensers, cooling towers, and so on. The point is there is not simply one line of attack for reducing a data center’s PUE.

The primary line of attack, in my view, should always be the energy expended to remove the heat from the servers. This is always done through fluid exchange. As server farms become more and more dense, oil- and water-cooled servers are becoming increasingly prevalent. For the present and near future, however, air remains the standard for most data centers.

The best air-cooled data centers circulate just enough air to accomplish the primary goal of keeping the servers happy. Contrary to what it may seem to some, there is some serious energy cost to moving air around a data center. Data center air-conditioners are far from box fans! They have high-power motors and use some serious juice. Not only that, but circulating too much air can greatly reduce the effectiveness of the heat-rejection portion of the cooling plant.
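For a rough sense of what "just enough air" means, the sketch below uses two textbook relationships that are not spelled out in this post: the sensible-heat balance (airflow = heat load / (density × specific heat × temperature rise)) and the idealized fan affinity law (fan power scales with the cube of airflow). The air properties, the 10 kW rack, and the 10 K temperature rise are all assumed values for illustration, and real fans and duct systems will deviate from the cube law.

```python
AIR_DENSITY_KG_M3 = 1.2            # assumed: air near sea level at about 20 °C
AIR_SPECIFIC_HEAT_J_KG_K = 1005.0  # assumed: dry air


def required_airflow_m3_per_s(heat_load_w: float, delta_t_k: float) -> float:
    """Volumetric airflow needed to carry away heat_load_w watts when the air
    warms by delta_t_k kelvin across the servers (sensible heat only)."""
    if heat_load_w < 0 or delta_t_k <= 0:
        raise ValueError("heat load must be >= 0 and temperature rise > 0")
    return heat_load_w / (AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KG_K * delta_t_k)


def fan_power_fraction(flow_fraction: float) -> float:
    """Idealized fan affinity law: relative fan power = (relative flow) cubed."""
    if flow_fraction < 0:
        raise ValueError("flow fraction must be non-negative")
    return flow_fraction ** 3


# Hypothetical rack: 10 kW of IT load, air warming 10 K front to back.
flow = required_airflow_m3_per_s(heat_load_w=10_000, delta_t_k=10)
print(f"Airflow needed: {flow:.2f} m^3/s")

# Relative fan power if over-circulated air is trimmed by 20%.
print(f"Fan power at 80% flow: {fan_power_fraction(0.8):.0%} of original")
```

Under these assumptions the rack needs about 0.83 cubic meters of air per second, and trimming excess airflow by 20% cuts fan power roughly in half, which is why over-circulating air is so expensive.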

As such, optimizing the airflow of a data center becomes mission #1 in keeping PUE under control. With ideal air circulation, fans are running at their most efficient point and it becomes much easier to tackle efficiency on the heat-rejection side (chillers, condensers, cooling towers, etc.).

With a small server room, designing for efficient airflow is a matter of simple best practices. But in a 10,000-square-foot data center with hundreds of server racks, it gets a whole lot more complicated. Airflow networks in large underfloor air systems are very complex and can be very tricky to balance with simple best practices alone.

This is where advanced analysis is absolutely critical.

In part two of this post, we’ll talk about CFD in data centers.

Image data source: http://ecmweb.com/energy-efficiency/data-center-efficiency-trends

1. http://www.nrdc.org/energy/data-center-efficiency-assessment.asp